In the span of just a few months, ChatGPT, an advanced conversational artificial intelligence language model, has gained over 200 million users worldwide. The tool has catapulted not only its user base but also the public conversation about the future of generative AI and its impacts on the world. Its release has been heralded as a “Promethean moment” – a technology so consequential that it will alter the trajectory of humanity.

Given its rapid development and far-reaching impacts, many, including its creators and developers, have called for urgent regulation of artificial intelligence. Sam Altman, the CEO of OpenAI, which developed ChatGPT, has acknowledged the possibility of existential risk; he fronted the US Congress and practically pleaded for legislative oversight. Microsoft President Brad Smith has proposed that the US government create a new agency to license artificial intelligence systems. Some have gone further. A letter signed by over a thousand tech leaders, including many AI pioneers, called for a moratorium on the development of advanced AI systems, describing them as a “profound risk to society and humanity”.

ChatGPT, and similar generative AI, can generate human-like responses based on text prompts input by the user. It can “chat” or respond because it has learned language patterns and information from existing data, which it then mimics to create responses or new content.

Given its capabilities and the urgent need for regulation, I thought maybe the best use of ChatGPT would be to help us articulate how governments can regulate it and similar systems. So, I asked it, “Can you offer policy recommendations to governments on how it can best regulate generative AI to help protect humanity and preserve democracy?”

No doubt programmed to articulate the views of its founders, here’s what it had to say.

Certainly! Here are some policy recommendations for governments to consider when regulating generative AI to protect humans and preserve democracy:

- Transparency and Disclosure: Governments should require clear disclosure when users are interacting with generative AI systems. Users should be informed that they are not engaging with a human, maintaining transparency and preventing the spread of misinformation or manipulation.

- Regulation of Malicious Use: Governments should enact regulations to prevent the malicious use of generative AI systems, such as deepfakes or AI-generated propaganda. Guidelines can be established to define the boundaries and acceptable uses of generative AI in areas such as politics, journalism, and public discourse.

- User Privacy and Data Protection: Governments should implement comprehensive data protection regulations to safeguard user privacy and prevent unauthorized access or misuse of personal data. Generative AI systems should adhere to strict privacy standards, and user consent should be obtained for data collection and usage.

- Accountability and Liability: Governments should establish frameworks to hold developers and deployers of generative AI systems accountable for their actions. Clear guidelines should be set for identifying responsibility and liability in cases of misuse or harm caused by AI-generated content.

- Auditing and Validation: Governments can require regular audits and validation processes for generative AI systems. Independent assessments can evaluate the fairness, biases, and ethical considerations of AI models to ensure they align with democratic values and human rights.

- Addressing Bias and Fairness: Governments should encourage AI developers to address biases in training data and algorithms. Guidelines and standards should be developed to ensure fairness, transparency, and accountability in the development and deployment of generative AI systems.

- Digital Literacy and Awareness: Governments should invest in educational programs to enhance digital literacy and raise awareness about the capabilities and limitations of generative AI systems. This empowers individuals to critically evaluate AI-generated content and safeguards against manipulation.

- International Collaboration: Given the global nature of generative AI, governments should collaborate internationally to establish common standards and regulations. Cooperation can help prevent regulatory arbitrage and address cross-border challenges related to generative AI.

- Ethical Guidelines and Human Rights: Governments should encourage the development and adoption of ethical guidelines specifically tailored to generative AI. These guidelines should align with democratic principles, protect human rights, and prohibit the use of AI systems for malicious purposes.

- Ongoing Evaluation and Adaptation: Governments should establish mechanisms for continuous evaluation, monitoring, and adaptation of regulations. The rapidly evolving nature of generative AI requires dynamic policies that can keep pace with technological advancements and emerging risks.

It is crucial for governments to strike a balance between regulation and innovation, ensuring the responsible development and deployment of generative AI while preserving democratic values and protecting human rights. Collaborative approaches involving AI developers, civil society organisations, academia, and international bodies can help shape effective and inclusive policies.

This sounds like a pretty good start and a convincing articulation of what we need to do to get AI under control. It’s as clear and convincing as any policy memo I’ve ever written. Maybe, dare I admit, more so?

But these policy recommendations are coming from a tool that, when I asked it to generate a bio note about me, a relatively simple task given that the information is already available on the internet, came up with a garbled string of inaccurate information that was nevertheless written in a convincing human tone. It said I was born in July (not true), in Melbourne, Australia (not true), that I had degrees from UC Berkeley and Harvard University (also not true), that I began my career at CSIS (also no), that I grew up in a family that “encouraged open dialogue” (not exactly), and that I had “exceptional capabilities” and made “extensive contributions” (well, a broken clock is right at least twice a day). Every time I asked it to regenerate a response, it came up with a less accurate biography using even more flattering language.

This made me consider my initial request in a new light. Systems such as ChatGPT are generative, meaning that they continue to “learn” and improve their copy from whatever data is input into them. But the content these systems generate has no grounding in reality; they are unable to assess whether the content they create is true or false, let alone display reasoning or discernment. This will no doubt improve with time, and probably rapidly so, but AI developers haven’t managed to overcome what’s been called the hallucination problem – and it’s not clear if and how they will.

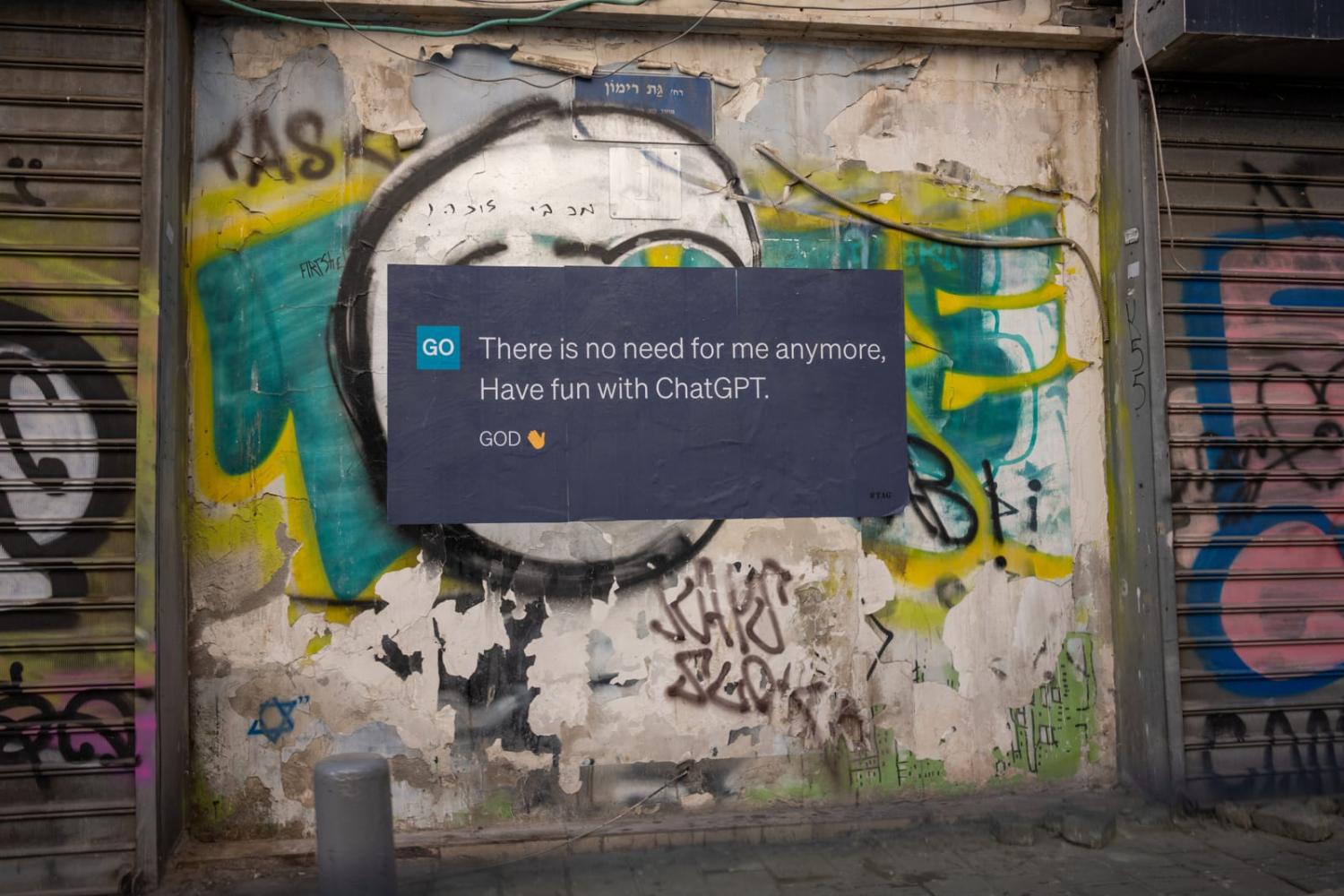

If the AI is hallucinating, then maybe those creators calling for regulation while already unleashing this enormously consequential technology are too.